Rendering Sonic in HTML (not canvas)

This project is insane. That is on purpose. Its a Sonic engine, written completely from scratch, rendered in HTML. Yes, Sonic is a <DIV> box, styled and animated with CSS. I only started this project to stress test a web framework I created (curvature), and to stress test my knowledge of document rendering and the browser in general. Things went very well, so I may have gotten a bit extreme with this “test project.”

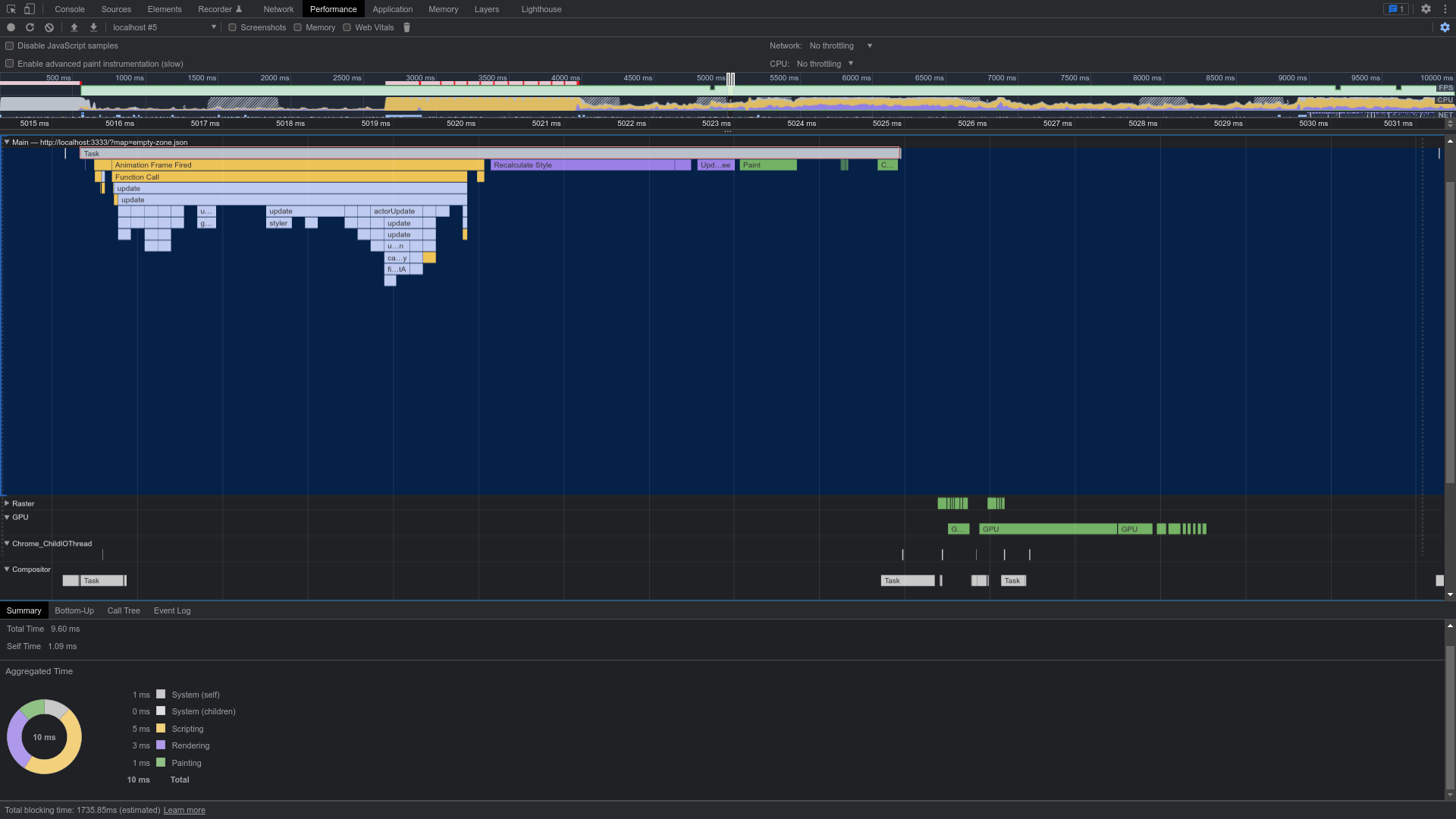

When normal people want to make a browser game, they’ll use canvas, and usually Web GL, allowing the developer to make use of the GPU. However, CSS also allows for access to the GPU. Once I'd realized the performance level allowed for simple games to be expressed, I started wondering: “How far can I really take this?”

I have been developing web pages since I was a child. I had my first website in 1998. It listed Pokemon cheats, and it looked terrible. Ever since then, I’d wondered if you could do a Sonic game in HTML. Eventually, I learned about JavaScript and before I’d become a teenager I wrote the idea off as completely insane and probably impossible.

Then hardware accelerated CSS came out. I spent time learning it, and over the years the browsers improved, and I kept at it. I wrote my own web framework for JavaScript and needed a project to use as a stress test. So I thought “What could be better at stressing the browser than taking a Sonic game and making everything in it an HTML element?”

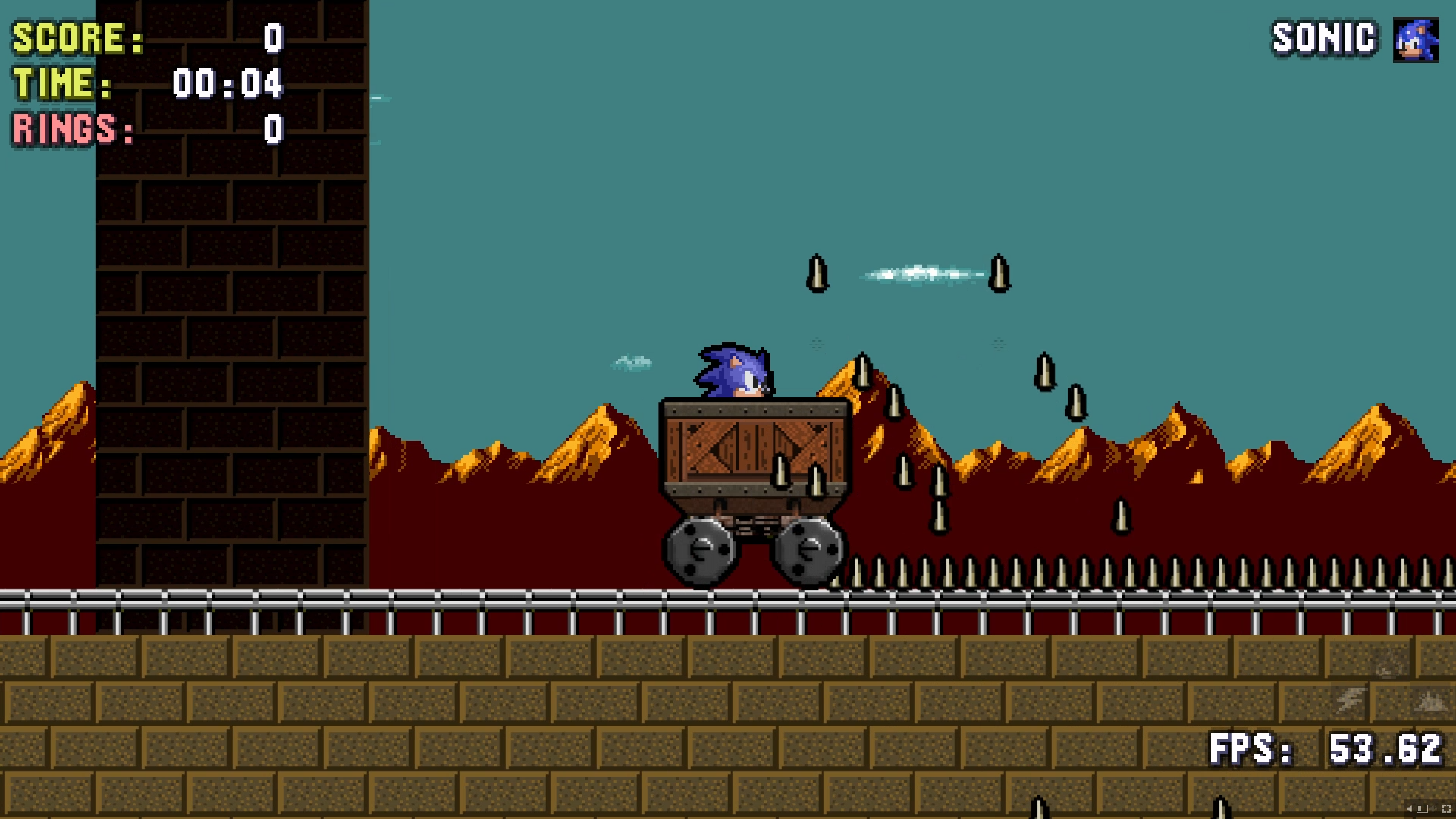

So I created Sonic 3000 (the game) and Pixel Physics (the engine). The goal was originally to clone the 8 bit games, that were released for the hand-held GameGear. The reason was that they were a little simpler and much less graphically intense than the mainline Genesis games. Once I got the physics right and saw how it ran, I immediately decided not only to try to match the Genesis games, but attempt to build on them. I believe I’ve succeeded at that.

You can view the project live at https://pixel-physics.seanmorr.is/. It runs best in Chrome, and I strongly encourage you to open the inspector and poke around the document.

Graphics

Each backdrop is a single HTML element. The parallax depth effect is generated by cutting the background image into a series of transparent PNGs. They’re applied to the element with a multi-valued background-image style. They have a background-repeat set to repeat=x. The background-image-x style is also multi-valued, where each image’s x value is set to a a decimal multiplied by custom property (--x) using CSS calc. When this one, single variable is modified, each strip moves by that amount, times its decimal. Thus we can scroll the background horizontally with a depth effect.

Since PNGs are transparent, they can be overlaid. This not only allows one element to appear to be in front of another, but also allows the background-color to shine through. By transitioning the background color through blues, oranges, purples and grays, different times of day can be simulated, changing while the background scrolls with parallax.

In Seaview Park Zone, all the layers in the background are literally just the background of one, single HTML element. That includes the stars, the clouds, the island and all their reflections in the water. It was tedious work ensuring all the strips of clouds had the same parallax factor, but worth it for the depth effect. The lightning in the second half is done by rapidly transitioning the background color, which will show where the clouds are semitransparent

Objects are just DIV boxes. They’re animated in a similar way to backdrops, but they only use one image at a time. Graphics for objects are organized into “sprite sheets” with each frame of animation evenly spaced, and each animation on its own row. With the appropriate CSS @keyframe definitions and selectors, I can change the animation by altering the data-animation attribute on the element.

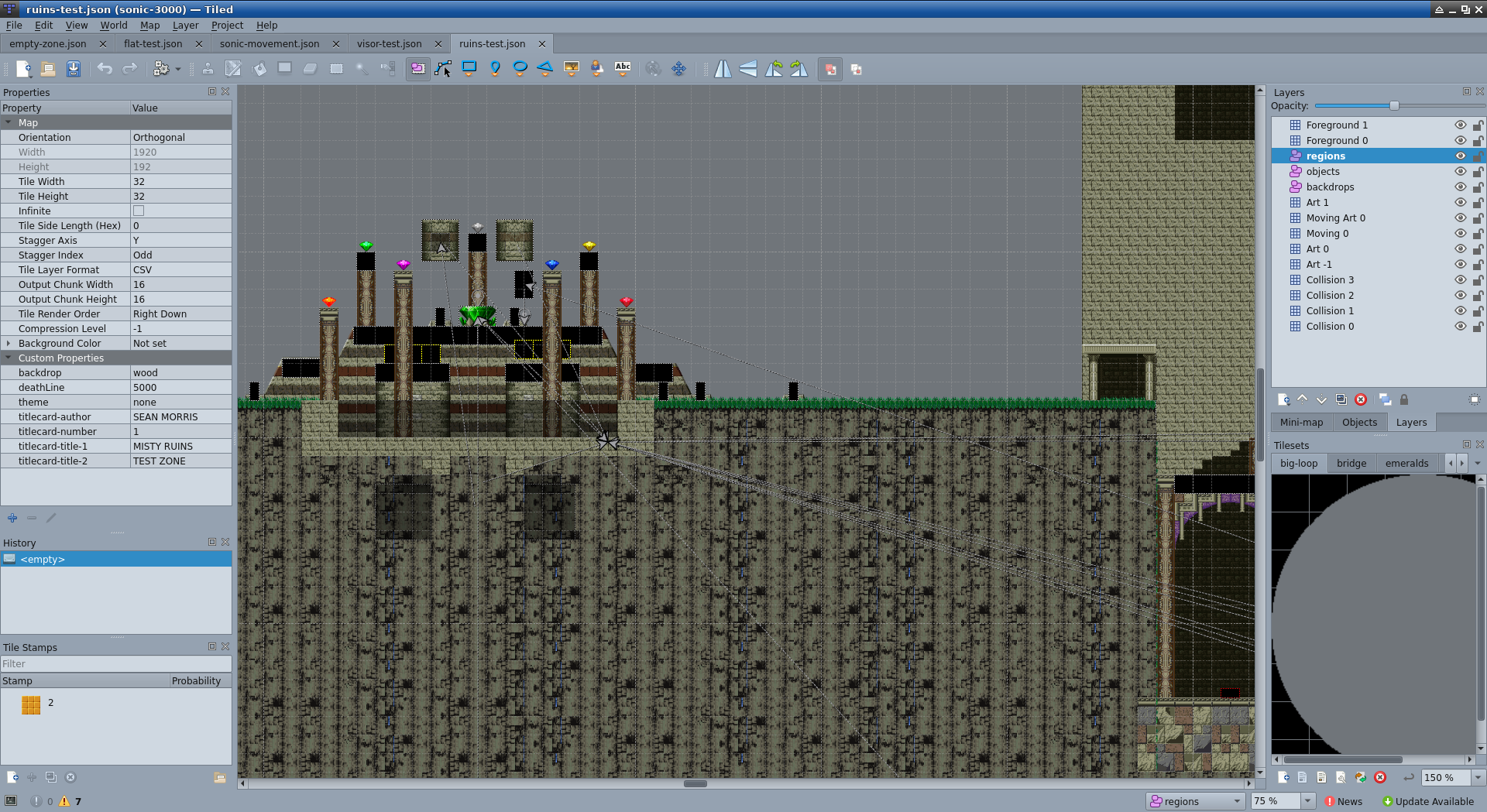

Tiles and objects are laid out graphically in Tiled, which is a dedicated editor for tile-based maps. Tile based mapping is convenient for multiple reasons: Its fast, compact, efficient and its what the genesis games used. With this method its very easy to write a speedy algorithm to cycle them into place and mimic the style of the original games.

SVG

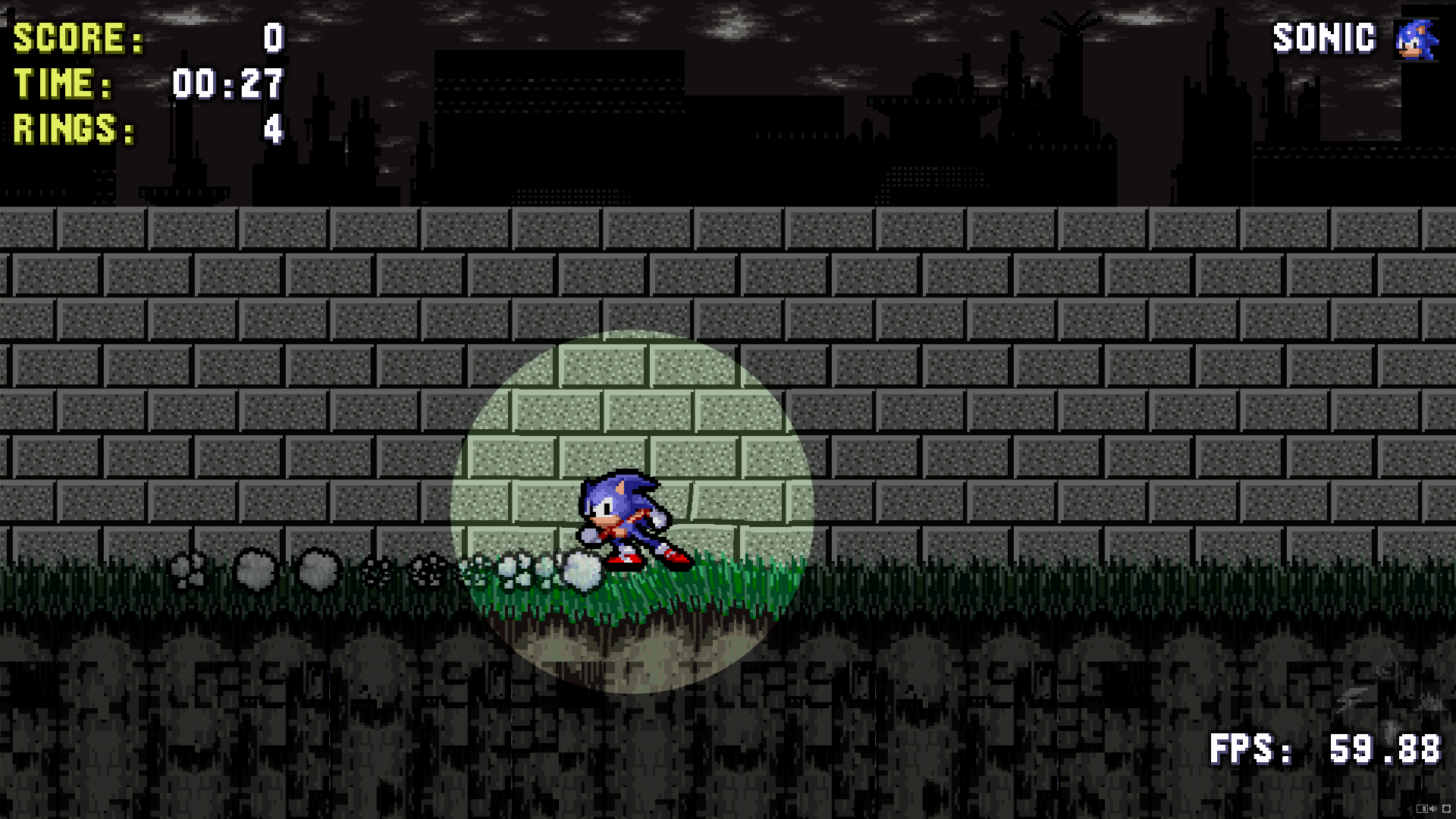

SVG is used in a lot of different ways in this project, which is relatively unexpected since 16 bit Genesis games used raster graphics, and SVG is generally concerned with vector images. However, it came in handy with its clip-path to generate a simple spotlight effect.

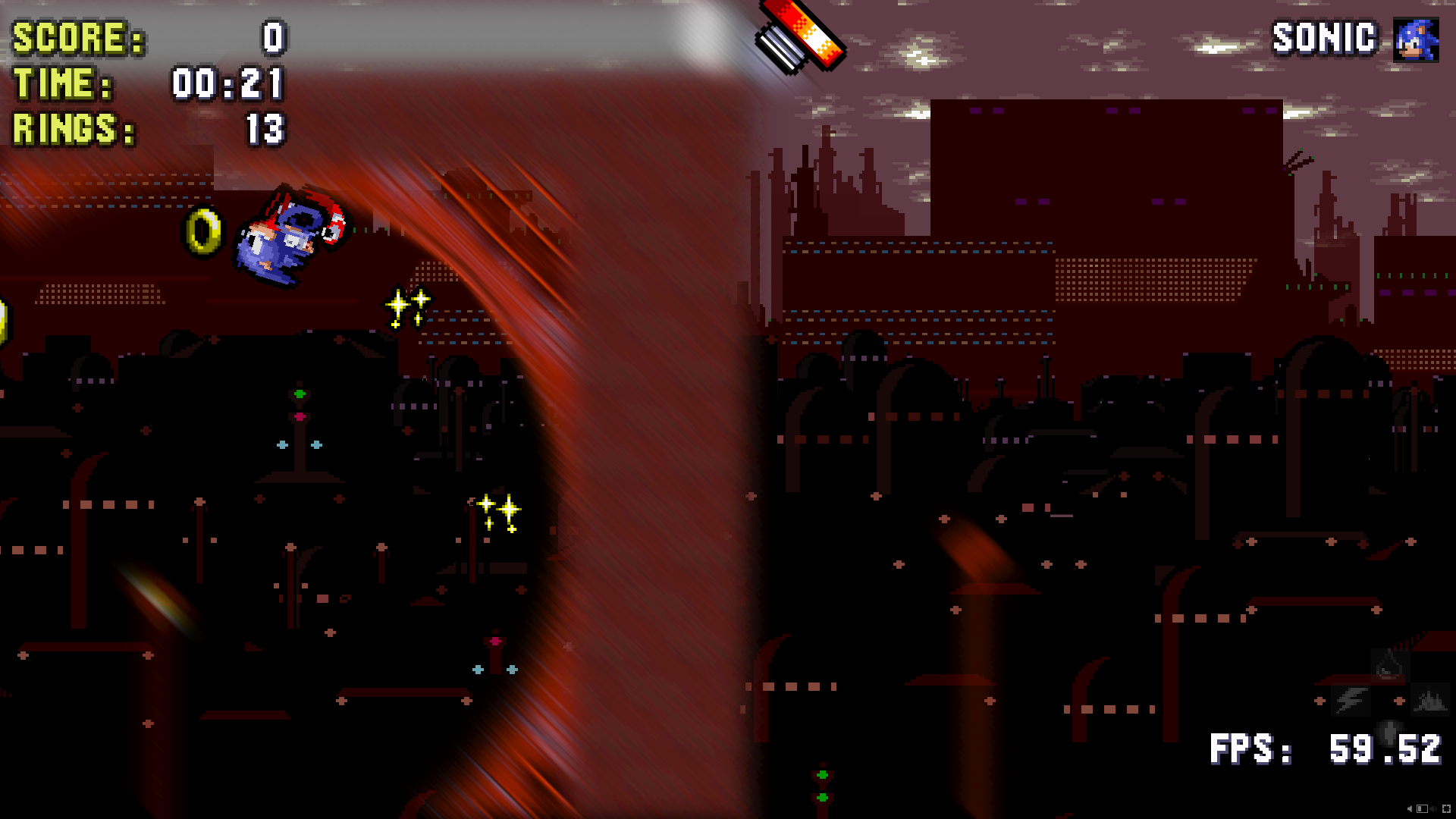

Even more powerfully, its able to generate displacement effects using color maps, which I used to create the wave effect for underwater areas. You’ll notice that although Sonic’s sprite is a single HTML element, the effect is only applied to the area with the water region. You can also see displacement effects when Sonic is skidding or charging a spindash, when you double tap the jump button and perform a doublespin, or when he’s standing on a bridge.

The directional motion blur effect is something I’m especially proud of. Directional blur in HTML/CSS is supposed to be impossible. You only get one blur() filter in CSS, and it’s omnidirectional (all directions at once). SVG takes it one step further by allowing for a blur-x and blur-y, but applying both of these equally will not be equivalent to a 45-degree motion blur. It works by nesting the entire tile layer in an element that is rotated so that the desired direction is displayed as horizontal. Another nested element applies a simple blur-x, and a third nested element cancels out the original rotation. Thus allowing for an ‘impossible’ blur effect. The blur is applied only to the ground and not the actual game objects as a courtesy to the player.

The most satisfying part here is that all of these SVG based effects can be applied to any normal HTML element.

PNG

To correctly cycle the colors of Hypersonic’s pallet while animations run, I’d need to use an additional 8 sprite sheets, one for each color, and white as a transitional state. While this would have been a workable solution, I didn’t find it acceptable. Rather than re-draw the entire thing using canvas, I decided to use a PNG with indexed color. I opened up the PNG as raw bytes in JavaScript, located the pallet data and replaced the colors there. This way I only need to change one byte for each color I want to replace. Once I’ve done that I can throw the resulting bytes into a blob, which I can take the URL for and use it in CSS with a transition on background-image to cycle the sprite sheets while animations are playing. This can be applied to any sprite in the system, so long as its using a PNG with indexed colors.

This all happens in real time when the users presses the button to transform:

Physics

Solid ground is defined by an array of tiles stored in groups in PNG images. Transparent pixels are empty and pixels with any opacity are solid. The source image is sampled with a canvas, and when each pixel is tested its solidity is cached. If the same tile is encountered elsewhere on the map, the cache is used.

The slope of the ground is determined by sampling a few pixels ahead and behind the player’s current position. To prevent jarring changes, the value is averaged with the values from the last few frames. This list is cleared if Sonic jumps or otherwise leaves the ground. When Sonic lands, this angle is used to calculate how much of his vertical momentum should be translated into horizontal momentum.

A 2d ray is cast along the direction of motion whenever an object moves. It length is equivalent to the object’s speed. If any of these pixels are solid, the object stops. If another object is on that pixel, the objects collide. This ensures the system won’t miss collision when objects are moving at high speeds. When Sonic lands, A few more rays are cast to determine the shape of the ground, and then his momentum is altered accordingly.

Layers & Loops

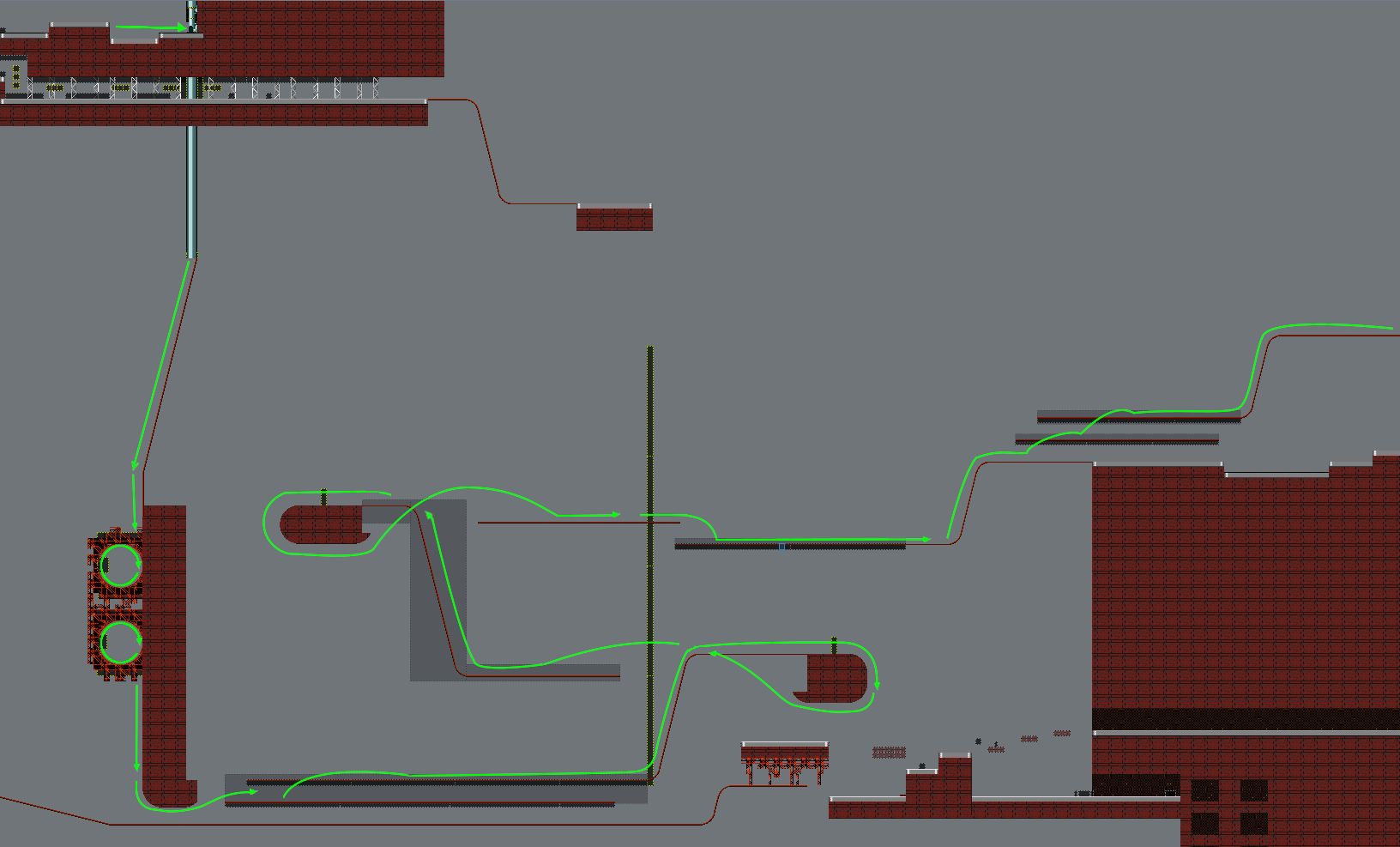

When running, or moving through the air, the player may encounter invisible 'layer switching' objects. This will 'move' the player to layer 1 or 2. If there is a solid tile in the player's path on that layer, there will be a collision. To keep things orderly, the player will always collide with solid tiles on layer 0. This allows a loop to be split across layers 1 and 2, and along with the layer-switching objects, the player can seamlessly traverse a loop.

Layer-switching can be used for more than just loops. Exploiting other aspects of the physics engine, along with creative layer-switch placement can allow for complex, branching pathways to be constructed around the level.

Input

To ensure users can make use of whatever input method they prefer, the system uses the HTML5 gamepad API. All input feeds into an abstract controller, where all input is mapped to either a button or an axis. Keyboard keys may also be mapped to buttons or axes within the abstract controller to unify all input. Buttons may also be mapped to axes so the d-pad and left stick function in similar ways. Digital buttons will have discrete 0 or 1 for their pressed property. Analog buttons will have a value in the interval between 0 and 1, inclusive. Axes will have a value between -1 and 1. Button press and release times are tracked, and can be compared to the current time to determine press times, hold times and release delays. This allows the game to detect things like quick double-taps.

If the controller supports rumble, the game will detect that automatically. Not only does the browser allow the game to vibrate the controller, it actually allows allows one to specify a specific type if vibration to play. Try hitting a spring or using the bounce attack from the bubble shield. You’ll notice a markedly different feeling from the two.

Menus & Text

The menu system makes use of the above input model to shift focus from one element to another around the page. CSS selectors are used to show which element is currently active, and the browser’s tendency to scroll that element to center upon focus is exploited to create a scrolling menu.

The character select in the “single player” menu is little more than a select box with some decorative trim. The silhouette above is actually rendered with the same graphics used for the player in-game. It has a CSS filter applied to remove the details. This ensures that the player graphic is loaded before any level starts. The settings menu is implemented in a simpler way, also with select boxes. The outline width input is simply an <INPUT> with its type set to number.

The system will also detect if the player is using a playstation, xbox, or generic gamepad vs the keyboard and display appropriate graphics when referring to controls. The system has a miniature font engine built in. It works by rendering some text as a series of <SPAN> tags, one for each character. Normal text uses the CSS sprite-sheet system, like game objects, but the elements are not animated.

Buttons are referred to by the unicode characters ⓿, ❶, ❷ and so on in the source text. You can see this live by highlighting the in-game prompts, they're normal HTML text. The dpad/arrow keys can be referred to as a whole with ✚, and specific directions use ← → ↑ ↓. These are rendered as <SPAN>s with a background image determined in CSS, or simply fall back to the letter of the keyboard key they are mapped to if the keyboard is being used (this is also an image, the original glyph has color:transparent; applied). All that is required to switch the display from one control scheme to another is to change a single CSS class on a parent element. All this results in strings of text that are rendered with images but still are selectable like any other text on a webpage.

Analytics

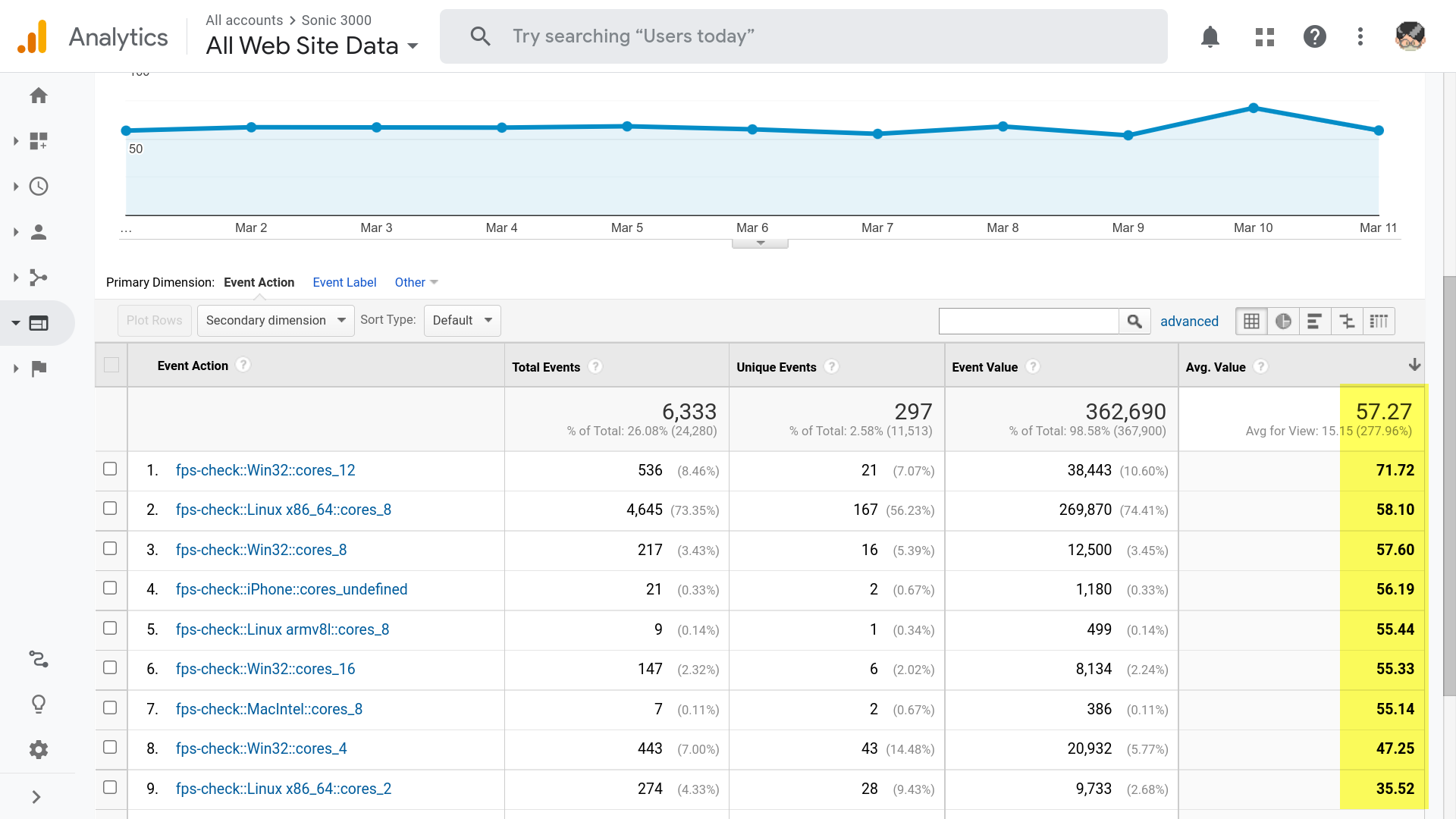

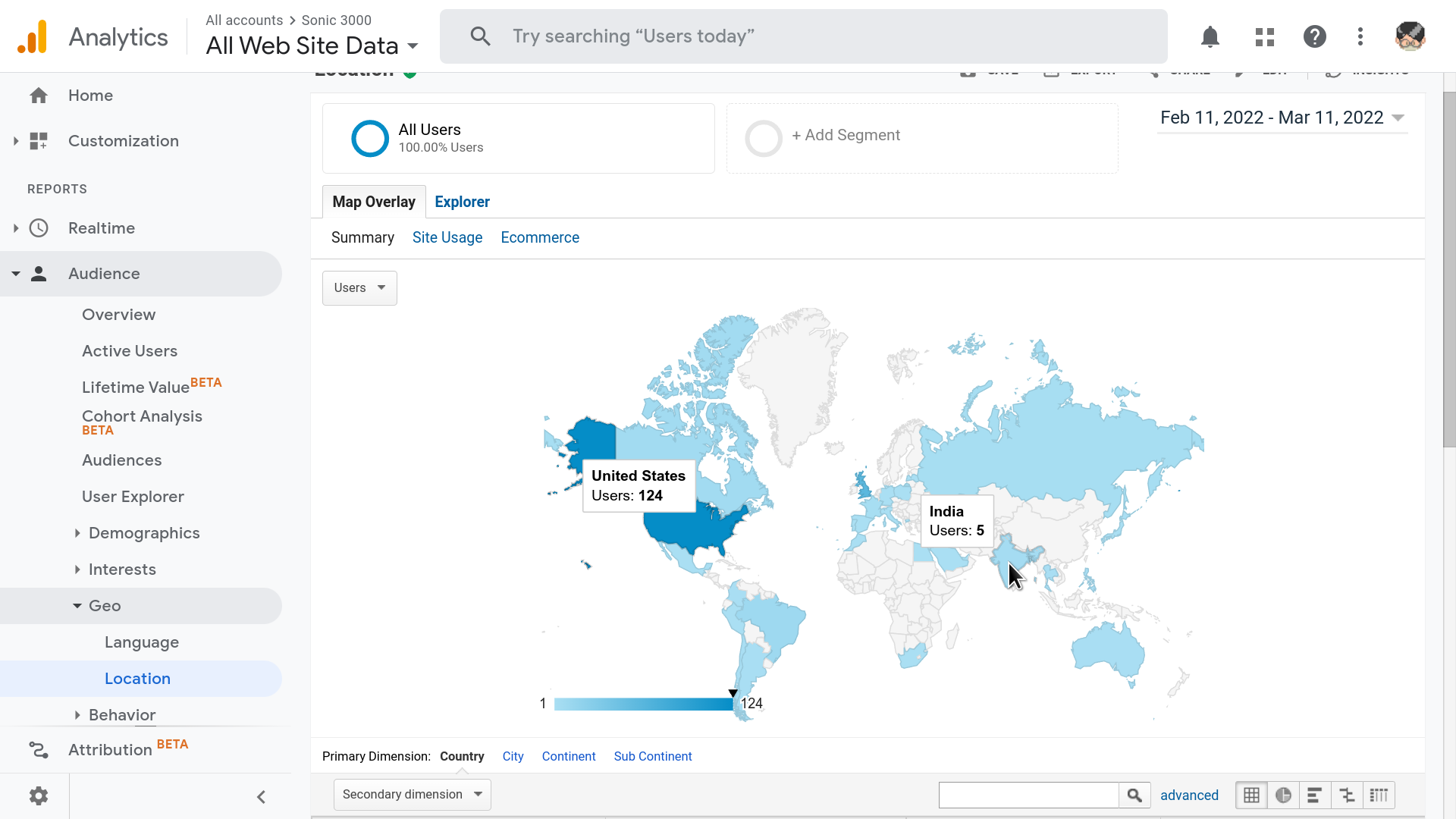

Like most web pages, the game makes use of Google Analytics. I was able to get a pretty high level of detail from it. I can see in real time when a user starts a level, connects a game controller, takes damage, and even whenever a ring is collected. The game reports all this information via GA Events. It also logs and transmits the current framerate after every 10 seconds of gameplay, so I can take statistics and ensure my optimizations actually improve things.

Networking

Online games are enabled through webrtc. It allows one user’s browser to connect directly to a peer’s and once that’s done they’re able to exchange messages. The game simply packages up a few relevant variables from the player object and sends them to the peer which syncs them and does the same.

The simulations are kept in sync by passing the frame id with each message. If the receiver is behind, their frame id is updated to the one in the message and any timeouts based on frames are called immediately before the simulation resumes. Objects with periodic motion like moving platforms will base their current position on a sine-like function applied to the frame id, keeping them in sync between clients.

Audio Subsystem

A "stack" of multuple HTML audio elements is maintained, with the one in the last position being played. If its not set to loop, once its done it will be removed and the one behind it will start. This allows for power ups to temporarily change the music, or even to stack. If an overridden tracks's powerup expires before the track has resumed, it can be removed from the stack early.

The Javascript will also check the MP3 for an id3v2 header. These appear in a surprisingly convenient format, right at the start of the MP3, if present. If such a header is found, the game will display the track name and artist in the botton left. The underlying hander emits events when the music changes, so the overarching system can easily trigger such alerts at relevent moments.

Sound effects are loaded and managed in a similar way, however they are allowed to play concurrently. Each sample is mapped to a pool of HTML Audio objects. If a request to play a sample is made, the system will first look for any objects in the pool that are not currently playing. If none are found, it will select the one that is the furthest along to restart. Before I began pooling objects, each on-screen object maintained its own Audio instances. This was unexpectedly expensive, and I got a decent performance boost once I began pooling pregenerated instances.

Idle Demo / Attract Mode

The system can record inputs and object states, index them to frames, and then play them back using a lot of the same code from the networking layer. This can be used to play a demo when the system is left idle on the title screen for long enough, as a sort of 'attract mode,' although the its triggered manually in the current implementation via the pause menu (under "demos.")

Closing

I would not have taken things to such an extreme degree had they not gone so well. I did have to apply some optimizations back to the original framework and the benefits were massive. I originally set out to prove it works fast, and I think I've done that. I learned a lot doing this project, and I’ve got an engine I can abstract out and share with other developers. I plan to abstract out the font, input, menu, PNG manipulation and SVG effect code into their own libraries and publish them on NPM. I also plan to submit Sonic 3000 to SAGE 2022 (Sonic Amateur Games Expo), and hopefully one day other developers will be able to use the underlying engine to do the same.